As I wrote in a previous article, I'm using Borgbackup to back up some servers and workstations. And most of the time it's going OK. Unfortunately, when it's not OK, it can be very (very) hard to recover. Spoiler alert: one of my backup repositories had a problem, it took me 16 days and 1131 connections to the repository to fix it.

Few weeks ago, I've noticed that the Borg repository for the backups of my MacBook Pro was holding way too many backups. The pruning process was not doing its job properly. So I’ve started to investigate, and discovered that every attempt to prune older backups failed.

Trying to check the repository failed too: every single time the connection was reset. Apparently the remote server was dropping the SSH connection after a while (between 20 and 90 minutes). Client-side the error message was Connection closed by remote host.

I started to wonder if the problem was the very large number of backups, so I tried to delete backups manually. Some of them were easy to delete but most of the older backups needed delete --force and even delete --force --force, which is pretty bad.

Basically, I destroyed two years worth of backups, but it was still impossible to check and repair the repository. Also, the use of --force and --force --force makes it impossible to reclaim the space until you repair the repository.

Digging in the (mis)direction of a connection problem I’ve tried everything I could. It failed every single time:

- tune

ClientAliveIntervalandClientAliveCountMaxserver-side - tune

ServerAliveIntervalandServerAliveCountMaxclient side - using and not using multiplexed ssh connections

- moving the client to another physical network

- using

caffeinateto launch the borg command - using Amphetamine

- upgrading ssh on the client

- upgrading borg

- giving mosh a try (nope)

- running ssh with -vvv

- using ktrace server-side to find the cause

None of this helped at all. Not to find a cause, not to make it work properly.

Eventually I stumbled upon this blog post: Repair Badly Damaged Borg Repository.

The repair method described failed too:

- step check --repair --repository-only worked

- but check --repair --archives-only died with the same Connection closed by remote host.

As a workaround attempt I’ve scripted a loop that would do check --repair --archives-only on every archive individually (~630).

After 3 full days and more than 600Go transferred from the repo to the client, every archives were checked and none was faulty.

Next step was to attempt check --repair again on the whole repository. About 15 hours later the output was

2851697 orphaned objects found! Archive consistency check complete, problems found.

Full working solution for me:

export BORG_CHECK_I_KNOW_WHAT_I_AM_DOING=YES

borg check -p --repair --repository-only LOGIN@HOST:/path/to/repo

borg -p list LOGIN@HOST:/path/to/repo | awk '{print $1}' > listborg

cat listborg | while read i; do

borg -vp check --repair --archives-only LOGIN@HOST:/path/to/repo::$i

done

borg -p check --repair LOGIN@HOST:/path/to/repo

Final step was to reclaim space on the server with compact:

$ borg compact -p --verbose path/to/repo/ compaction freed about 376.22 GB repository space.

Boy that was painful!

I’m pretty sure it’s not really an ssh issue. Something is causing the connection to drop but I have ssh connections opened for days between that client and that server, so I guess ssh is not the problem here. Also, borgbackup’s github is loaded with issues about failing connections. In the end it boils down to:

- borg repositories can break badly without warning

- repairing a repo might not worth the bandwidth/CPU/IOs

I used to have a quite complicated backup setup, involving macOS Time Machine, rsync, shell scripts, ZFS snapshots,

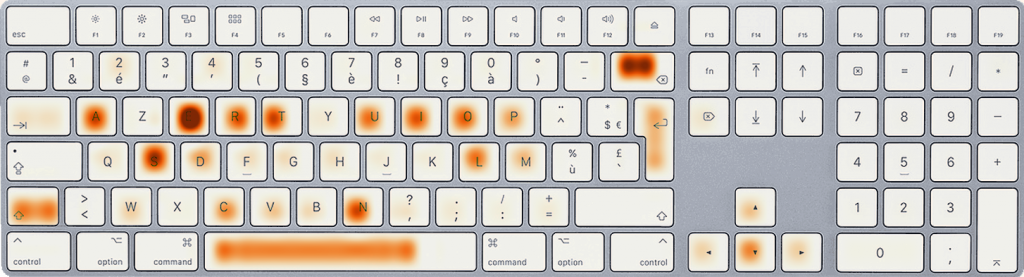

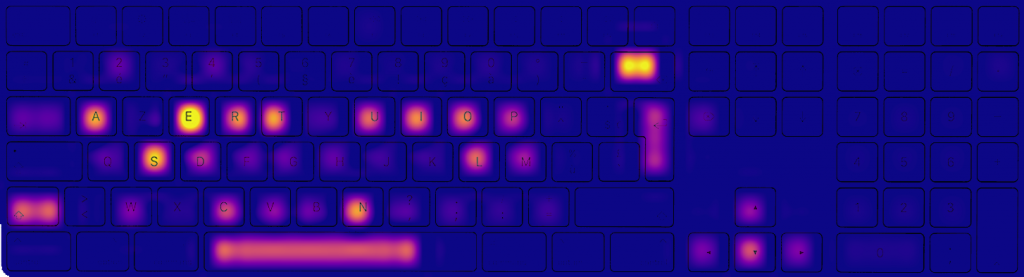

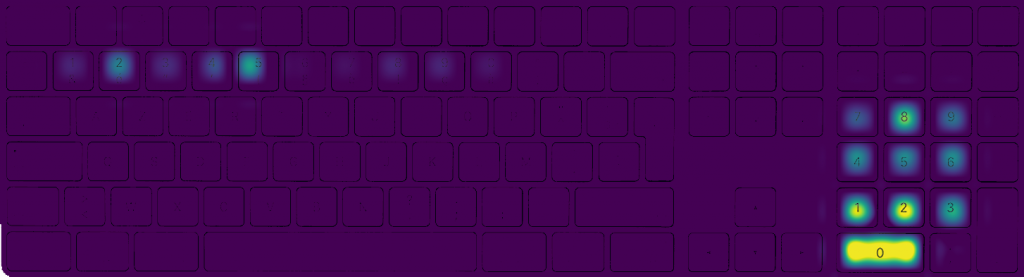

I used to have a quite complicated backup setup, involving macOS Time Machine, rsync, shell scripts, ZFS snapshots,  La seule piste viable était donc de trouver une solution libre et ouverte, dans un langage que je comprenne a minima, de sorte que je puisse modifier le programme pour l'adapter à un clavier étendu en français.

La seule piste viable était donc de trouver une solution libre et ouverte, dans un langage que je comprenne a minima, de sorte que je puisse modifier le programme pour l'adapter à un clavier étendu en français.