A few months ago I discovered that Splunk had not bothered to update its forwarder to support FreeBSD 14. It’s a real PITA for many users, including myself. After asking around for support on that problem and seeing Splunk quietly ignore the voice of its users, I decided to try running the Linux version on FreeBSD.

Executive summary: it works great on both FreeBSD 14 and 13, but with some limitations.

A user like me has a few options:

- (re)check whether you really need a local log forwarder (for everything that is not handled by syslog). If you don’t, just ditch the Splunk forwarder and tune syslogd to send logs to a Splunk indexer directly

- find an alternative solution that suits you: very hard if you have a full Splunk ecosystem or if, like me, you really are addicted to Splunk

- run the Linux version on FreeBSD: needs some skills but works great so far

Obviously, I’m fine with the last one.

Limitations

You will run a proprietary Linux binary in a totally unsupported environment: you are on your own and it can break at any time, either because of FreeBSD or because of Splunk.

You will run the Splunk forwarder inside a chroot environment: your log files will have to be available inside the chroot, or Splunk won’t be able to read them. I initially wrote that no ACL residing on your FreeBSD filesystem would be available to the Linux chroot, and that you must not rely on ACLs to grant Splunk access to your log files. That statement is partially wrong: you can rely on FreeBSD ACLs, but it might require some tweaks on the user/group side.

How to

Below you’ll find a quick-and-dirty, step-by-step guide that worked for me. Not everything will be detailed or explained, and YMMV.
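Everything below depends on the FreeBSD Linux compatibility layer being enabled. A minimal sketch of the usual way to do that, assuming the stock rc scripts; this fragment is an assumption about a default install rather than part of the original procedure, so check it against your release:

```shell
# /etc/rc.conf fragment (rc.conf is plain sh syntax).
# Assumption: the stock rc.d/linux script loads the Linux ABI at boot.
linux_enable="YES"
```

With that in place, `service linux start` (or a reboot) should bring the layer up. The debootstrap command used in this guide is also assumed to be installed on the host, for instance with `pkg install debootstrap`.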

The first step is to install a Linux environment. You must activate the Linux compatibility feature. I’ve used both Debian and Devuan successfully. Here is what I’ve done for Devuan:

    zfs create -o mountpoint=/compat/devuan01 sas/compat_devuan01
    curl -OL https://git.devuan.org/devuan/debootstrap/raw/branch/suites/unstable/scripts/ceres
    mv ceres /usr/local/share/debootstrap/scripts/daedalus
    curl -OL https://files.devuan.org/devuan-archive-keyring.gpg
    mv devuan-archive-keyring.gpg /usr/local/share/keyrings/
    ln -s /usr/local/share/keyrings /usr/share/keyrings
    debootstrap daedalus /compat/devuan01

This last step should fail; it seems that this is to be expected. Following that same guide:

    chroot /compat/devuan01 /bin/bash
    dpkg --force-depends -i /var/cache/apt/archives/*.deb
    echo "APT::Cache-Start 251658240;" > /etc/apt/apt.conf.d/00chroot
    exit

Back on the host, add what you need to /etc/fstab:

    # Device    Mountpoint                 FStype     Options                      Dump  Pass#
    devfs       /compat/devuan01/dev       devfs      rw,late                      0     0
    tmpfs       /compat/devuan01/dev/shm   tmpfs      rw,late,size=1g,mode=1777    0     0
    fdescfs     /compat/devuan01/dev/fd    fdescfs    rw,late,linrdlnk             0     0
    linprocfs   /compat/devuan01/proc      linprocfs  rw,late                      0     0
    linsysfs    /compat/devuan01/sys       linsysfs   rw,late                      0     0

Then mount everything and finish the installation:

    mount -al
    chroot /compat/devuan01 /bin/bash
    apt update
    apt install openrc
    exit
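Before going further, it can be worth confirming that all five filesystems from the fstab entries really are mounted under the chroot. The helper below is a hypothetical sketch (the function name and the `CHROOT` variable are mine, not part of the original procedure); it reads `mount` output and lists whatever is missing:

```shell
# Chroot path used in this post; override CHROOT for another location.
CHROOT="${CHROOT:-/compat/devuan01}"

# Reads `mount` output on stdin and prints each expected mount point
# that does not appear in it, one per line.
missing_mounts() {
    mounted="$(cat)"
    for sub in dev dev/shm dev/fd proc sys; do
        case "$mounted" in
            *" on $CHROOT/$sub ("*) ;;               # mounted, nothing to report
            *) printf '%s\n' "$CHROOT/$sub" ;;       # not found in the mount list
        esac
    done
}

# Usage on the host:
#   mount | missing_mounts
```

An empty result means every expected mount point was found.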

Make your log files available inside the chroot:

    mkdir -p /compat/devuan01/var/hostnamedlog
    mount_nullfs /var/named/var/log /compat/devuan01/var/hostnamedlog
    mkdir -p /compat/devuan01/var/hostlog
    mount_nullfs /var/log /compat/devuan01/var/hostlog

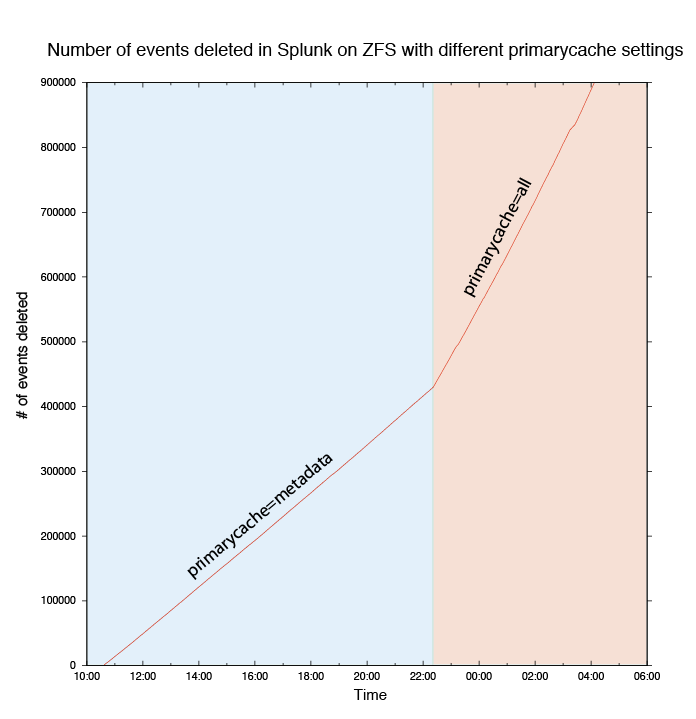

Note: /var/named/var/log and /var/log are ZFS filesystems. You’ll have to make the nullfs mounts permanent by adding them in /etc/fstab.
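As a sketch of what those permanent entries could look like, the hypothetical helper below prints one fstab line per nullfs mount. The chroot path is the Devuan one used elsewhere in this post (adjust it if your mounts live elsewhere), and the `rw,late` options are an assumption modelled on the other fstab entries:

```shell
# Print an /etc/fstab line for a nullfs mount.
# Arguments: source directory on the host, target directory in the chroot.
nullfs_fstab_line() {
    # "late" defers the mount until late in the boot sequence; whether
    # that is late enough for your ZFS datasets is something to verify.
    printf '%s\t%s\tnullfs\trw,late\t0\t0\n' "$1" "$2"
}

nullfs_fstab_line /var/log           /compat/devuan01/var/hostlog
nullfs_fstab_line /var/named/var/log /compat/devuan01/var/hostnamedlog
```

Review the two printed lines before appending them to /etc/fstab.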

Now you can install the Splunk forwarder:

    chroot /compat/devuan01 /bin/bash
    ln -sf /usr/share/zoneinfo/Europe/Paris /etc/localtime
    useradd -m splunkfwd
    export SPLUNK_HOME="/opt/splunkforwarder"
    mkdir $SPLUNK_HOME
    echo /opt/splunkforwarder/lib >/etc/ld.so.conf.d/splunk.conf
    ldconfig
    apt install curl
    dpkg -i splunkforwarder_package_name.deb
    /opt/splunkforwarder/bin/splunk enable boot-start -systemd-managed 0 -user splunkfwd
    exit

Note: splunk enable boot-start -systemd-managed 0 activates the Splunk service as an old-school init.d service. systemd is not available in the context of a Linux chroot on FreeBSD.

Now, from the host, grab your existing config files and copy them into your Linux chroot:

cp /opt/splunkforwarder/etc/system/local/{inputs,limits,outputs,props,transforms}.conf /compat/devuan01/opt/splunkforwarder/etc/system/local/

Then edit /compat/devuan01/opt/splunkforwarder/etc/system/local/inputs.conf accordingly: in my case it means I must replace /var/log with /var/hostlog and /var/named/var/log with /var/hostnamedlog.
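If the file has many monitor stanzas, the substitution can be scripted. This is a hedged sketch with a hypothetical function name; it assumes the paths only need changing in `[monitor://...]` stanza headers, which may not hold for every inputs.conf:

```shell
# Rewrite monitor stanza headers to point at the nullfs mount points.
# Reads an inputs.conf on stdin and writes the result to stdout.
rewrite_monitor_paths() {
    # Both rules are anchored on the stanza header, so the /var/log rule
    # cannot touch /var/named/var/log. Note that any path starting with
    # /var/log (such as /var/logs) would be rewritten as well.
    sed -e 's|^\[monitor:///var/named/var/log|[monitor:///var/hostnamedlog|' \
        -e 's|^\[monitor:///var/log|[monitor:///var/hostlog|'
}

# Usage on the host:
#   conf=/compat/devuan01/opt/splunkforwarder/etc/system/local/inputs.conf
#   rewrite_monitor_paths < "$conf" > "$conf.new" && mv "$conf.new" "$conf"
```

Writing to a temporary file first leaves the original untouched if sed fails.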

Go back into your Devuan chroot and start Splunk:

    chroot /compat/devuan01 /bin/bash
    service splunk start
    exit

To do

I still need to figure out how to properly start the service from outside the chroot (when FreeBSD boots). No big deal.
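One untested possibility is to run the same service command non-interactively from the host, for example from /etc/rc.local. The sketch below is only an assumption about how that could look: the function name and the `CHROOT_CMD` override are hypothetical, and it presumes that `service` lives at /usr/sbin/service inside the chroot.

```shell
# Start the forwarder inside the chroot from the FreeBSD host.
# First argument: chroot path (defaults to the one used in this post).
# CHROOT_CMD exists only so the command can be previewed with "echo".
start_chroot_splunk() {
    ${CHROOT_CMD:-chroot} "${1:-/compat/devuan01}" /usr/sbin/service splunk start
}

# Preview without running anything:
#   CHROOT_CMD=echo start_chroot_splunk
# Real call, for instance from /etc/rc.local:
#   start_chroot_splunk /compat/devuan01
```

The chroot mounts declared in /etc/fstab must already be in place when this runs, so the point in the boot sequence where it is called matters.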

More than six months away, I've put both
